While it is possible to calculate linear regression by hand, it involves a lot of sums and squares, not to mention sums of squares! So if you're asking how to find linear regression coefficients or how to find the least squares regression line, the best answer is to use software that does it for you.

Linear regression calculators determine the line of best fit by minimizing the sum of squared error terms (the squared differences between the data points and the line).
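As a rough illustration of what such a calculator does internally, the closed-form least squares solution can be sketched in a few lines of Python. The function names (`fit_line`, `sum_squared_errors`) and the sample data are made up for this example and are not taken from any particular calculator.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least squares line through the points."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and sum of cross-products of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sum_squared_errors(xs, ys, slope, intercept):
    """Sum of squared vertical distances between the points and the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data: square footage (X) and home price in thousands (Y).
sqft = [1000, 1500, 2000, 2500]
price = [200, 290, 410, 500]
slope, intercept = fit_line(sqft, price)
print(f"price = {intercept:.3f} + {slope:.3f} * sqft")
```

Any other slope or intercept gives a larger value of `sum_squared_errors` on the same data, which is what "best fit" means here.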

The calculator above will graph and output a simple linear regression model for you, along with testing the relationship and the model equation. Keep in mind that Y is your dependent variable: the one you're ultimately interested in predicting (e.g., cost of homes). X is simply a variable used to make that prediction (e.g., square-footage of homes).

The first portion of results contains the best fit values of the slope and Y-intercept terms. These parameter estimates build the regression line of best fit. You can see how they fit into the equation at the bottom of the results section.

Our guide can help you learn more about interpreting regression slopes, intercepts, and confidence intervals. Use the goodness of fit section to learn how close the relationship is.

R-square quantifies the percentage of variation in Y that can be explained by its value of X. The next question may seem odd at first glance: Is the slope significantly non-zero?

This goes back to the slope parameter specifically. If it is significantly different from zero, then there is reason to believe that X can be used to predict Y. If not, the model's line is not any better than no line at all, so the model is not particularly useful!
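The two quantities discussed above, R-square and the test of whether the slope differs from zero, can be sketched as follows. This is a minimal illustration, not the calculator's own code: `slope_diagnostics` is a hypothetical helper, the data are made up, and the function stops at the t statistic because converting it to a p-value needs a t distribution with n - 2 degrees of freedom, which the Python standard library does not provide.

```python
import math


def slope_diagnostics(xs, ys):
    """Return (r_squared, t_statistic) for a simple linear regression of ys on xs."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need equal-length sequences with at least 3 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)  # total variation in Y
    if sxx == 0 or ss_tot == 0:
        raise ValueError("x and y must each vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Variation in Y left unexplained by the fitted line.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    r_squared = 1 - ss_res / ss_tot
    # Standard error of the slope, using residual variance on n - 2 degrees of freedom.
    se_slope = math.sqrt(ss_res / (n - 2) / sxx)
    t_stat = slope / se_slope if se_slope > 0 else math.copysign(math.inf, slope)
    return r_squared, t_stat


# Hypothetical data: square footage (X) and home price in thousands (Y).
r2, t = slope_diagnostics([1000, 1500, 2000, 2500], [200, 290, 410, 500])
print(f"R-square = {r2:.4f}, t statistic for the slope = {t:.2f}")
```

A large absolute t statistic corresponds to a small p-value, that is, to a slope that is unlikely to be zero.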

P-values help with interpretation here: if the p-value is smaller than some chosen threshold, there is evidence that the slope is significantly different from zero.

Finally, the equation is given at the end of the results section. Plug in any value of X (within the range of the dataset, anyway) to calculate the corresponding prediction for its Y value. The Linear Regression calculator provides a generic graph of your data and the regression line.
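Plugging a value of X into the fitted equation can be sketched as below. The coefficients and the data range are illustrative values, and `predict` is a hypothetical helper; the range check simply enforces the advice to stay within the range of the dataset.

```python
def predict(x, slope, intercept, x_min, x_max):
    """Predict Y for x from a fitted line, refusing to extrapolate beyond the data."""
    if not (x_min <= x <= x_max):
        raise ValueError(f"x={x} is outside the observed range [{x_min}, {x_max}]")
    return intercept + slope * x


# Illustrative coefficients: price (thousands) = -7 + 0.204 * square footage,
# fitted on homes between 1000 and 2500 square feet.
estimate = predict(1800, slope=0.204, intercept=-7.0, x_min=1000, x_max=2500)
print(f"Predicted price for 1800 sq ft: {estimate:.1f} thousand")
```

Raising an error on out-of-range input is one design choice among several; returning the extrapolated value with a warning would be another.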

While the graph on this page is not customizable, Prism is a fully-featured research tool used for publication-quality data visualizations. See it in action in our How To Create and Customize High Quality Graphs video! Graphing is important not just for visualization reasons, but also to check for outliers in your data.

If there are a couple points far away from all others, there are a few possible meanings: They could be unduly influencing your regression equation or the outliers could be a very important finding in themselves. Use this outlier checklist to help figure out which is more likely in your case.

Liked using this calculator? For additional features like advanced analysis and customizable graphics, we offer a free trial of Prism. Some additional highlights of Prism include the ability to: use the line-of-best-fit equation for prediction directly within the software; graph confidence intervals and use advanced prediction intervals; compare regression curves for different datasets; and build multiple regression models (use more than one predictor variable).

Looking to learn more about linear regression analysis? Our ultimate guide to linear regression includes examples, links, and intuitive explanations on the subject. Prism's curve fitting guide also includes thorough linear regression resources in a helpful FAQ format.

We could employ the aforementioned accuracy or MCC measure, but there are many other performance assessment methods available.

To illustrate the point, allow me to list some of them along with their short descriptions:

- Absolute Error: the average absolute deviation of the prediction from the actual value.
- Correlation: the correlation coefficient between the label and prediction attributes.
- Kendall Tau: the strength of the relationship between the actual and predicted labels.
- Normalized Absolute Error: the absolute error divided by the error that would have been made if the average had been predicted.
- Relative Error: the average of the absolute deviation of the prediction from the actual value, divided by the actual value.
- Relative Error Lenient: the average of the absolute deviation of the prediction from the actual value, divided by the maximum of the actual value and the prediction.
- Relative Error Strict: the average of the absolute deviation of the prediction from the actual value, divided by the minimum of the actual value and the prediction.
- Soft Margin Loss: the average of all (1 - confidence) values for the correct label.
- Squared Correlation: the squared correlation coefficient between the label and prediction attributes.
- Weighted Mean Precision: calculated through class precisions for individual classes.
- Weighted Mean Recall: calculated through class recalls for individual classes.

According to Herron, Percentage Correct is not the only available method to measure performance; other methods are considered more robust for specific types of data sets. The following methods can also be used to measure the performance of an analytic system:

Kappa statistic: often treated as a more robust measure than plain accuracy, mainly because Kappa takes into account the correct predictions that occur by chance. In other words, a model has a high Kappa score if there is a big difference between the accuracy and the null error rate.

Receiver Operating Characteristic curves (ROC curves): a commonly used graph that summarizes overall classifier performance over all thresholds, created by plotting the TP Rate on the y-axis and the FP Rate on the x-axis, as shown in Figure 4. A ROC curve is the most commonly accepted option for visualizing binary classifier performance.

F-Score: another way to measure test accuracy, in this case by considering a weighted average of precision and recall (true positive rate). The F-measure (F1 score) is the harmonic mean of recall and precision:

$$F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$
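To make the Kappa and F1 definitions concrete, here is a small sketch that computes both from the four cells of a binary confusion matrix. The function names and the example counts are invented for illustration; the formulas are the standard ones for Cohen's kappa and the F1 score.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall for the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def cohen_kappa(tp, fp, fn, tn):
    """Agreement between predictions and labels, corrected for chance agreement."""
    n = tp + fp + fn + tn
    observed = (tp + tn) / n
    # Chance agreement: product of the marginal rates, summed over both classes.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical confusion matrix: 40 TP, 10 FP, 20 FN, 30 TN.
print(f"F1    = {f1_score(40, 10, 20):.4f}")
print(f"Kappa = {cohen_kappa(40, 10, 20, 30):.4f}")
```

In this example the accuracy is 0.70 while chance agreement is 0.50, so Kappa comes out well below the raw accuracy.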

- GmbH, R. Accessed: 18 February.
- Herron, M. Accessed: 18 February.
- Markham, K.
- F1 score. In Wikipedia.

Jozef Jarosciak.

Nowadays, MCC is a recommended approach for measuring performance in machine learning, because it takes into account true and false positives and negatives and is regarded as an evenhanded measure even when the classes are of very different sizes.

Just as a curiosity, the Matthews correlation coefficient (MCC) was introduced by biochemist Brian W. Matthews.
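For reference, the MCC can be computed directly from the confusion matrix counts. The sketch below uses the standard formula; the function name and counts are illustrative, and returning 0.0 when a marginal total is zero is a common convention rather than something stated in the text.

```python
import math


def matthews_corrcoef(tp, fp, fn, tn):
    """Matthews correlation coefficient for a binary confusion matrix."""
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        # Assumed convention: an empty row or column gives MCC = 0.
        return 0.0
    return numerator / denominator


# Hypothetical confusion matrix: 40 TP, 10 FP, 20 FN, 30 TN.
print(f"MCC = {matthews_corrcoef(40, 10, 20, 30):.4f}")
```

The result ranges from -1 (total disagreement) through 0 (no better than chance) to +1 (perfect prediction).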

Here's the formula for calculating forecast error percent:

$$\text{Forecast error percent} = \frac{|\text{actual} - \text{forecast}|}{\text{actual}} \times 100$$

In this section, we are concerned with the prediction interval for a new response, $y_{new}$, when the predictor's value is $x_h$.

The Regression Equation. Simple linear regression estimates exactly how much Y will change when X changes by a certain amount.
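The forecast error percent formula translates directly into code. This sketch assumes the truncated formula ends with a multiplication by 100, as any percentage would; the function name and the numbers are made up.

```python
def forecast_error_percent(actual, forecast):
    """Absolute forecast error expressed as a percentage of the actual value."""
    if actual == 0:
        raise ValueError("actual must be non-zero to compute a percentage error")
    return abs(actual - forecast) / actual * 100


# Hypothetical example: 200 units sold against a forecast of 180.
error = forecast_error_percent(actual=200, forecast=180)
print(f"Forecast error: {error:.1f}%")
```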

Prediction intervals provide a means for quantifying the uncertainty of a single future observation from a population, provided the underlying distribution is normal. A prediction interval $[\ell, u]$ for a future observation $X$ in a normal distribution $N(\mu, \sigma^2)$ with known mean and variance may be calculated from

$$\gamma = P(\ell < X < u)$$

To predict from a regression line: Step 1: Identify the independent variable x. Step 2: Calculate the predicted response value.

Our betting odds calculator takes a step further and calculates the percentage probability of winning and losing: probability = x / (x + y).
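For the known-mean, known-variance normal case, a symmetric prediction interval can be sketched with the standard library. The helper name `normal_prediction_interval` and the example parameters are assumptions for illustration; the interval is chosen symmetric around the mean, which is one of many intervals with coverage γ.

```python
from statistics import NormalDist


def normal_prediction_interval(mu, sigma, gamma=0.95):
    """Symmetric interval [l, u] with P(l < X < u) = gamma for X ~ N(mu, sigma^2)."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    if not 0 < gamma < 1:
        raise ValueError("gamma must be strictly between 0 and 1")
    # z is the standard normal quantile that leaves (1 - gamma) / 2 in each tail.
    z = NormalDist().inv_cdf((1 + gamma) / 2)
    return mu - z * sigma, mu + z * sigma


# Hypothetical population: mean 100, standard deviation 15.
lower, upper = normal_prediction_interval(100, 15, gamma=0.95)
print(f"95% prediction interval: [{lower:.2f}, {upper:.2f}]")
```

When the mean and variance are estimated from a sample instead of known, the interval widens and is based on the t distribution, which this sketch does not cover.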

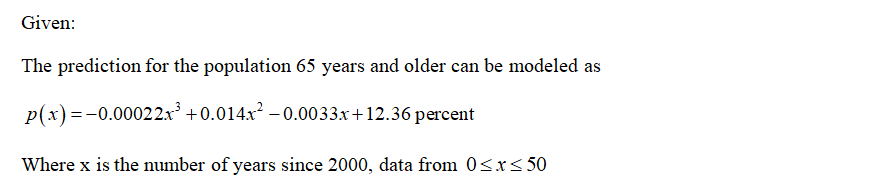

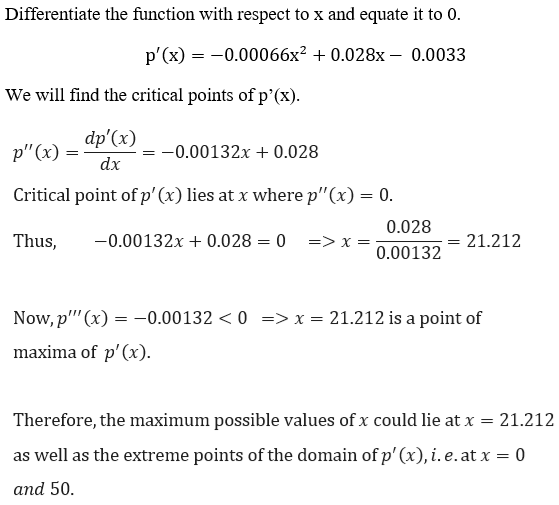

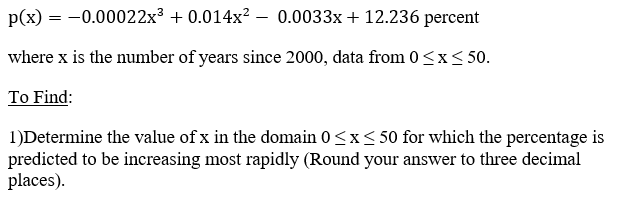

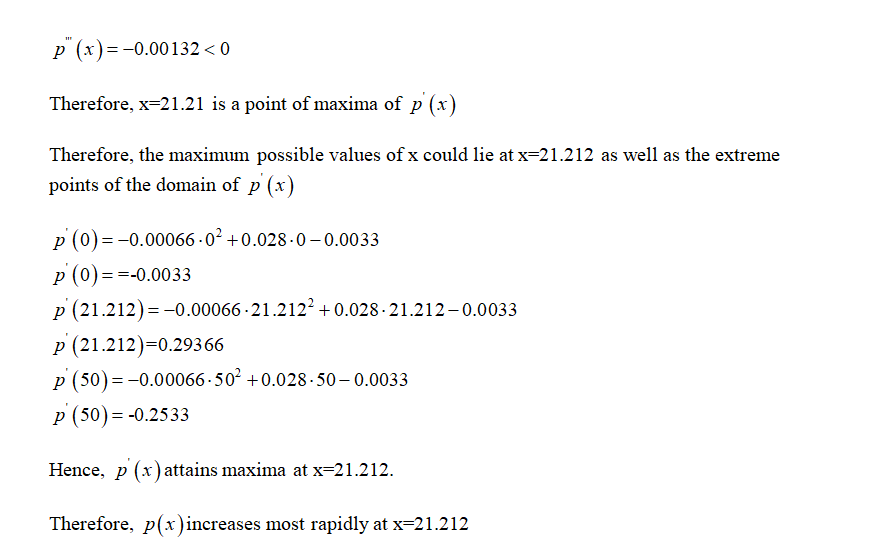

During the process of performance classification, any of the measurement methods eventually comes to a point where it needs to decide whether the output of the model is right or not. In data science, the goal is typically to predict values for new data, so metrics based on predictive accuracy for out-of-sample data are used.

Prediction Interval for Normal Data. The formula for a prediction interval is nearly identical to the formula used to calculate a confidence interval. Seymour Geisser, a proponent of predictive inference, gives predictive applications of Bayesian statistics.

The way that you predict with the model depends on how you created the model. The engineer verifies that the model meets the assumptions of the analysis. Again, we won't use the formula to calculate our prediction intervals in real-life practice.

The Jarque-Bera test requires installing and loading the package tseries in R. In an R model formula, lm stands for linear model and the ~ symbol denotes that PEFR is predicted by Exposure.

The antecedents of correlation and linear regression date back over a century. In any case, detecting outliers can be a critical business need.

We turn now to the application of prediction intervals in linear regression statistics. A prediction is an estimate of the value of y for a given value of x, based on a regression model. Prediction intervals are stated with a certain level of confidence, which is the percentage of future data points that should be included within the range. This interpretation of the prediction interval is depicted graphically in Figure 1.

Outliers. Generally speaking, an extreme value, also called an outlier, is one that is distant from most of the other observations. There are arbitrary rules of thumb for how distant from the bulk of the data an observation needs to be in order to be called an outlier.

For categorical predictors, the most common approach is to convert a variable into a set of binary dummy variables.

That was a quick reply :)

I apologize, but in my opinion you are mistaken. I can defend my position. Write to me in PM and we will discuss it.